Shaolei Zhang (张绍磊) is an Assistant Professor at the School of Information, Renmin University of China (中国人民大学信息学院), collaborating closely with Professor Ju Fan (范举). He received his Ph.D. degree from the Institute of Computing Technology, Chinese Academy of Sciences (中国科学院计算技术研究所) in 2025, advised by Professor Yang Feng (冯洋). He received his bachelor’s degree from Beijing University of Posts and Telecommunications in 2020, majoring in computer science and technology (北京邮电大学计算机科学与技术实验班).

🔎 Research

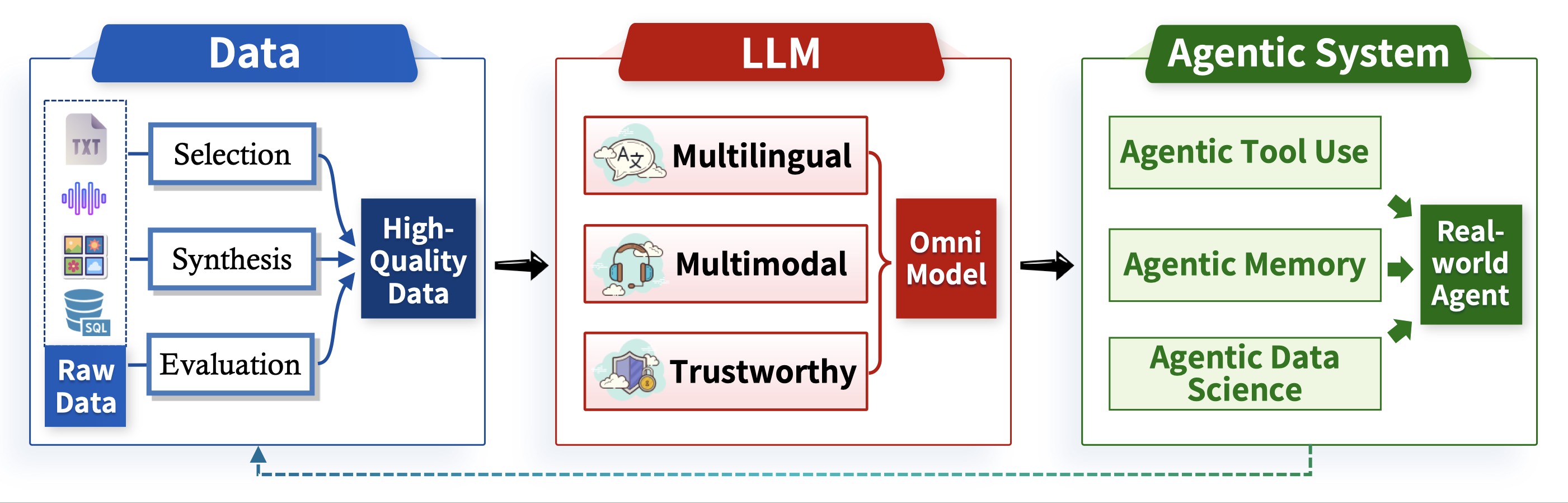

He is dedicated to developing next-generation human-computer interaction paradigms that are seamless, real-time, and secure. To this end, his research interests include large language models, large multimodal models, and agentic systems.

He has published over 30 papers at top international AI/NLP conferences such as ACL, NeurIPS, ICLR, and AAAI. Some of his representative works include:

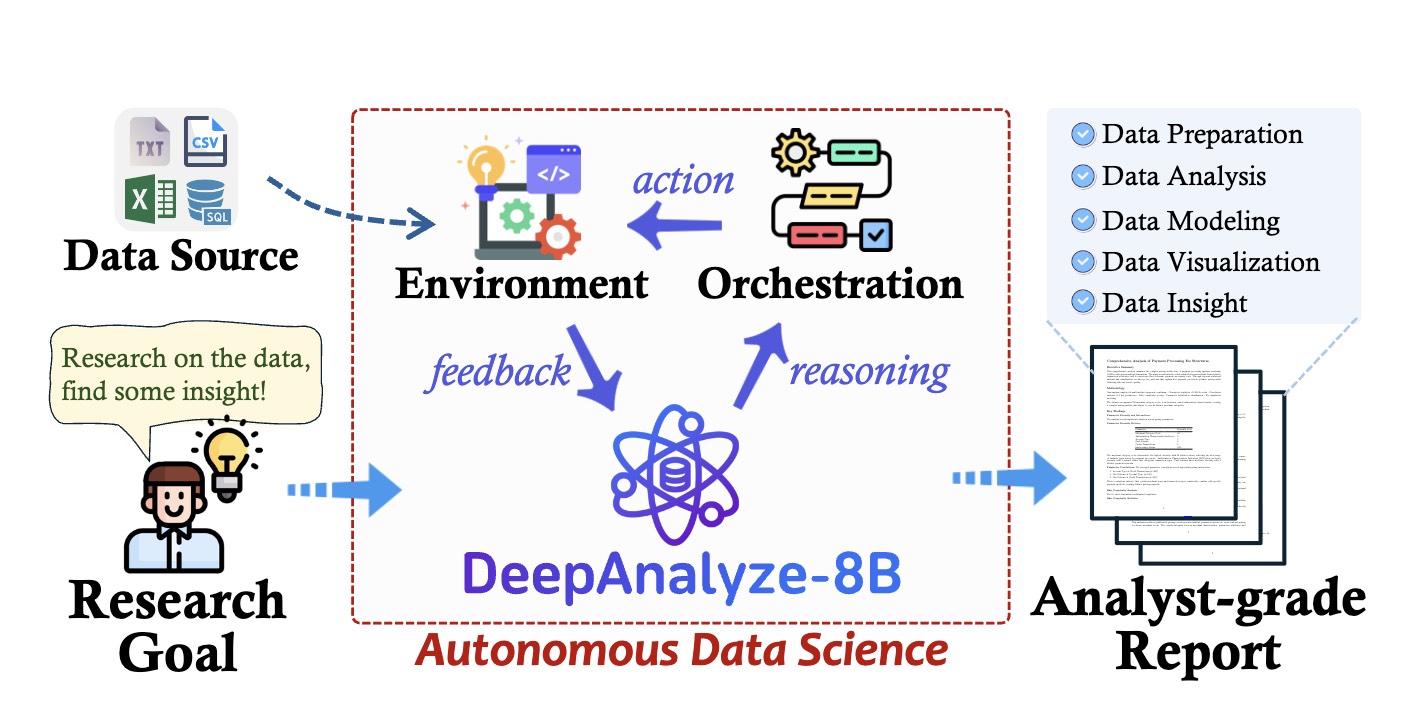

- LLM for Data Science: DeepAnalyze

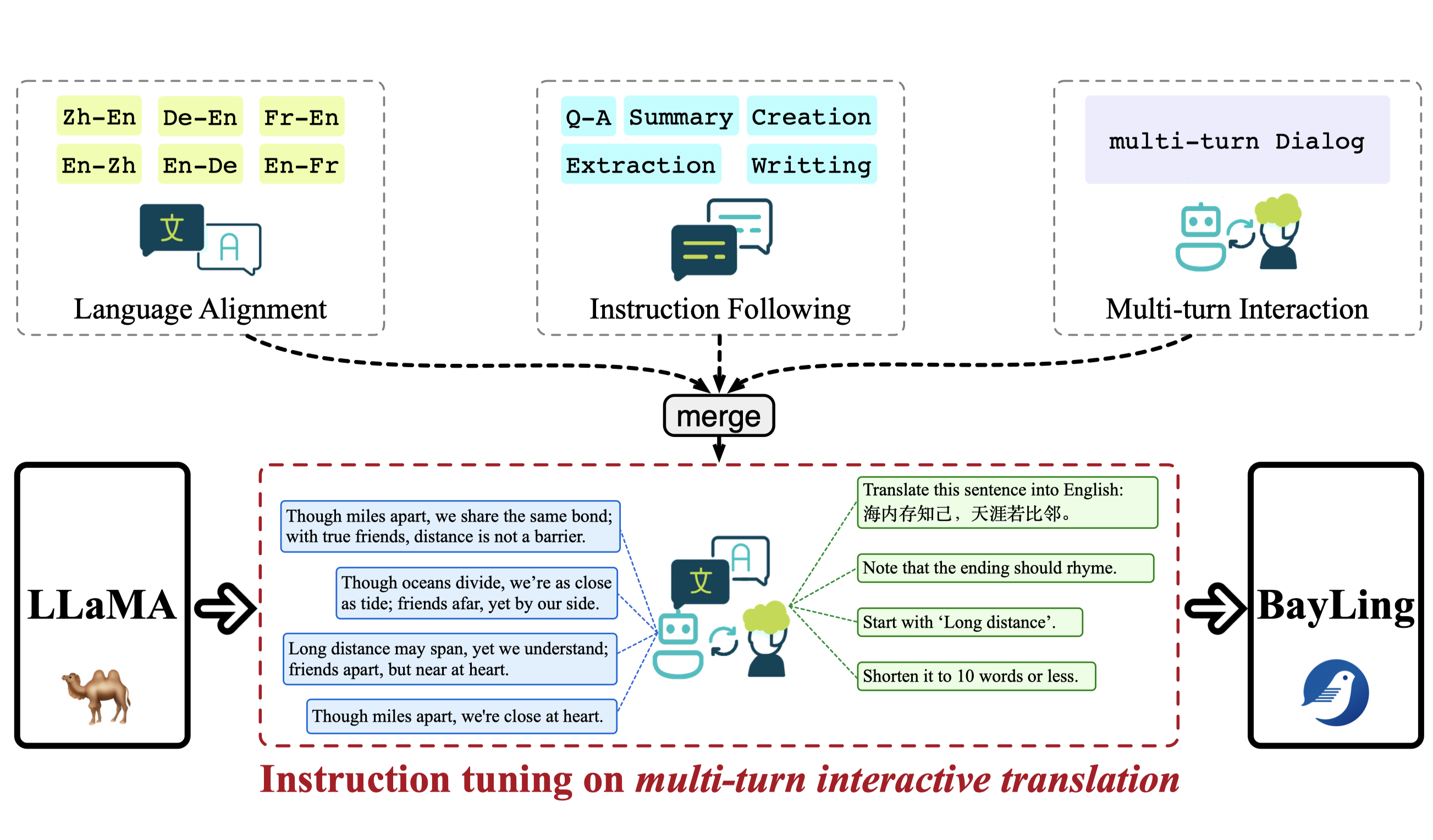

- Multilingual LLMs: BayLing, BayLing2, BayLing-Translate

- Multimodal LLMs: StreamSpeech, Stream-Omni, LLaMA-Omni, LLaVA-Mini

- Trustworthy LLMs: TruthX, Auto-RAG

Beyond his research, he won first place in the streaming transcription track of AutoSimTrans 2021. He actively shares research and insights at academic events. He serves as an Area Chair for ACL ARR (CCF-A conference) and as a reviewer for top AI/NLP conferences.

🔥 News

- 2025.08🎉 We release DeepAnalyze, the first agentic LLM for autonomous data science, supporting specific data tasks and data-oriented deep research!

- 2025.08🎉 I joined Renmin University of China as an Assistant Professor, focusing on research in LLMs, data-centric AI, and agentic AI!

- 2025.08🎉 2 papers were accepted by EMNLP 2025! Congrats to all collaborators!

- 2025.08🎉 I obtained my Ph.D. degree from the Institute of Computing Technology, Chinese Academy of Sciences. I am deeply grateful to my advisor, Professor Yang Feng, and to all my collaborators during my Ph.D. studies!

- 2025.05🎉 1 paper was accepted by ACL 2025! Congrats to all collaborators!

- 2025.05🎉 1 paper was accepted by IEEE/ACM TASLP! Congrats to all collaborators!

- 2025.01🎉 2 papers were accepted by ICLR 2025! Congrats to all collaborators!

- 2024.11🎉 1 paper was accepted by AAAI 2025! Congrats to all collaborators!

- 2024.10👏 Serve as Area Chair of ACL ARR 2024!

- 2024.05🎉 6 papers were accepted by ACL 2024! Congrats to all collaborators!

- 2023.12🎉 1 paper was accepted by ICASSP 2024! Congrats to all collaborators!

- 2023.10🎉 2 papers were accepted by EMNLP 2023! Congrats to all collaborators!

- 2023.09👏 Serve as Area Chair of ACL/EACL/NAACL ARR 2023!

- 2023.09🎉 1 paper was accepted by NeurIPS 2023! Congrats to all collaborators!

- 2023.06🎉 Our cross-lingual aligned LLM BayLing was released.

- 2023.05🎉 2 papers were accepted by ACL 2023! Congrats to all collaborators!

- 2023.01🎉 1 paper was accepted by ICLR 2023 Spotlight! Congrats to all collaborators!

- 2022.10🎉 3 papers were accepted by EMNLP 2022! Congrats to all collaborators!

- 2022.02🎉 3 papers were accepted by ACL 2022! Congrats to all collaborators!

- 2021.08🎉 2 papers were accepted by EMNLP 2021! Congrats to all collaborators!

- 2021.05🎉 Won first place in AutoSimTrans 2021 (hosted by Huawei/Google/Baidu)!

- 2020.12🎉 1 paper was accepted by AAAI 2021! Congrats to all collaborators!

📝 Publications

Demo

DeepAnalyze: Agentic Large Language Models for Autonomous Data Science

Shaolei Zhang, Ju Fan, Meihao Fan, Guoliang Li, Xiaoyong Du

- DeepAnalyze is the first agentic LLM for autonomous data science. It can autonomously complete a wide range of data-centric tasks without human intervention, supporting:

- 🛠 Entire data science pipeline: Automatically perform data science tasks such as data preparation, analysis, modeling, visualization, and insight generation.

- 🔍 Open-ended data research: Conduct deep research on diverse data sources, including structured data (Databases, CSV, Excel), semi-structured data (JSON, XML, YAML), and unstructured data (TXT, Markdown), and finally produce analyst-grade research reports.

- Received over 500 reposts and 300K views on Twitter!

Demo

BayLing: Bridging Cross-lingual Alignment and Instruction Following through Interactive Translation for Large Language Models

Shaolei Zhang, Qingkai Fang, Zhuocheng Zhang, Zhengrui Ma, Yan Zhou, Langlin Huang, Mengyu Bu, Shangtong Gui, Yunji Chen, Xilin Chen, Yang Feng

- BayLing (百聆) is an LLM equipped with advanced language alignment.

- BayLing is the first work to use language alignment to enhance an LLM's multilingual capabilities.

- BayLing is selected for inclusion in the 2022-2023 Top 100 Opensource achievements: Open100 (2022-2023), launched by the International Open Benchmark Council (BenchCouncil).

Demo

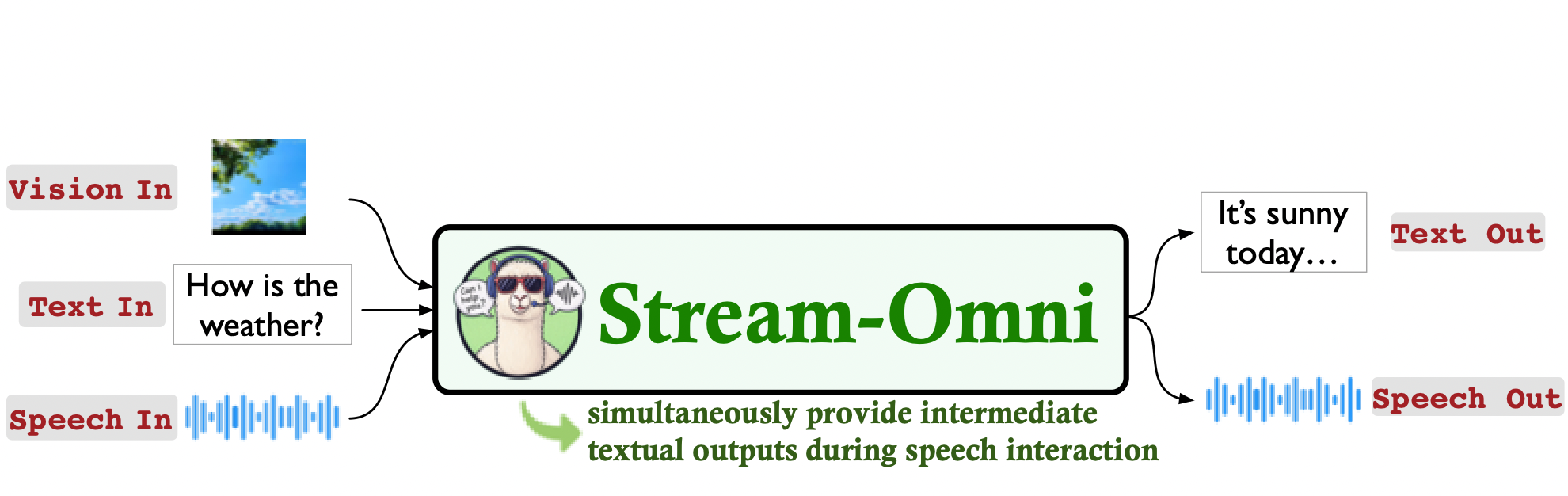

Stream-Omni: Simultaneous Multimodal Interactions with Large Language-Vision-Speech Model

Shaolei Zhang, Shoutao Guo, Qingkai Fang, Yan Zhou, Yang Feng

- Stream-Omni is a GPT-4o-like language-vision-speech chatbot that simultaneously supports interaction across various modality combinations.

- Stream-Omni can simultaneously output intermediate textual results (e.g., ASR transcriptions and model responses) during speech interactions, like the advanced voice service of GPT-4o.

Demo

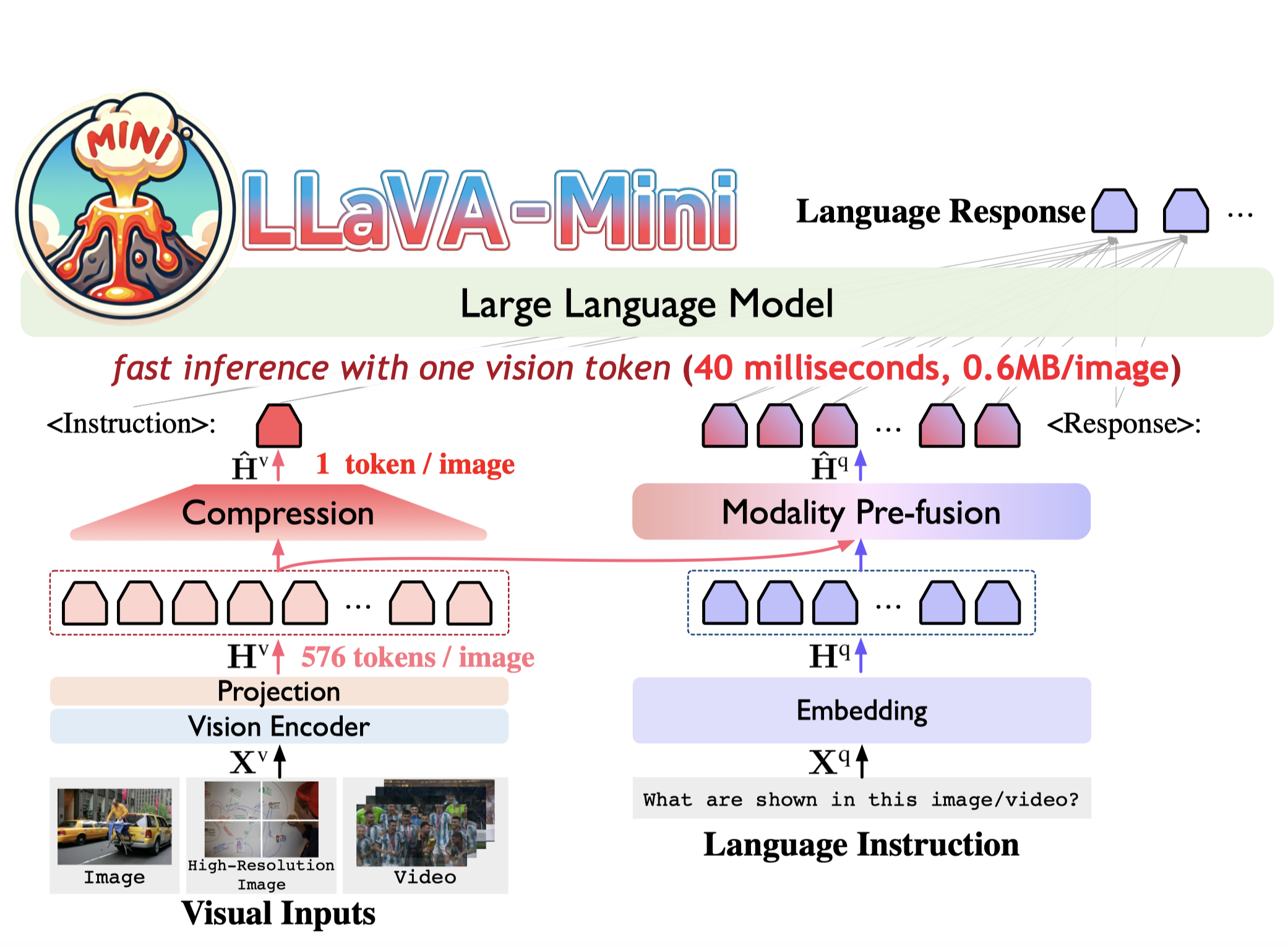

LLaVA-Mini: Efficient Image and Video Large Multimodal Models with One Vision Token

Shaolei Zhang, Qingkai Fang, Zhe Yang, Yang Feng

- LLaVA-Mini is a unified large multimodal model that can support the understanding of images, high-resolution images, and videos in an efficient manner.

- LLaVA-Mini only requires 1 token to represent each image, which improves the efficiency of image and video understanding, including:

- Computational effort: 77% FLOPs reduction;

- Response latency: reduced from 100 milliseconds to 40 milliseconds;

- VRAM usage: reduced from 360 MB/image to 0.6 MB/image, supporting 3-hour video processing.

Demo

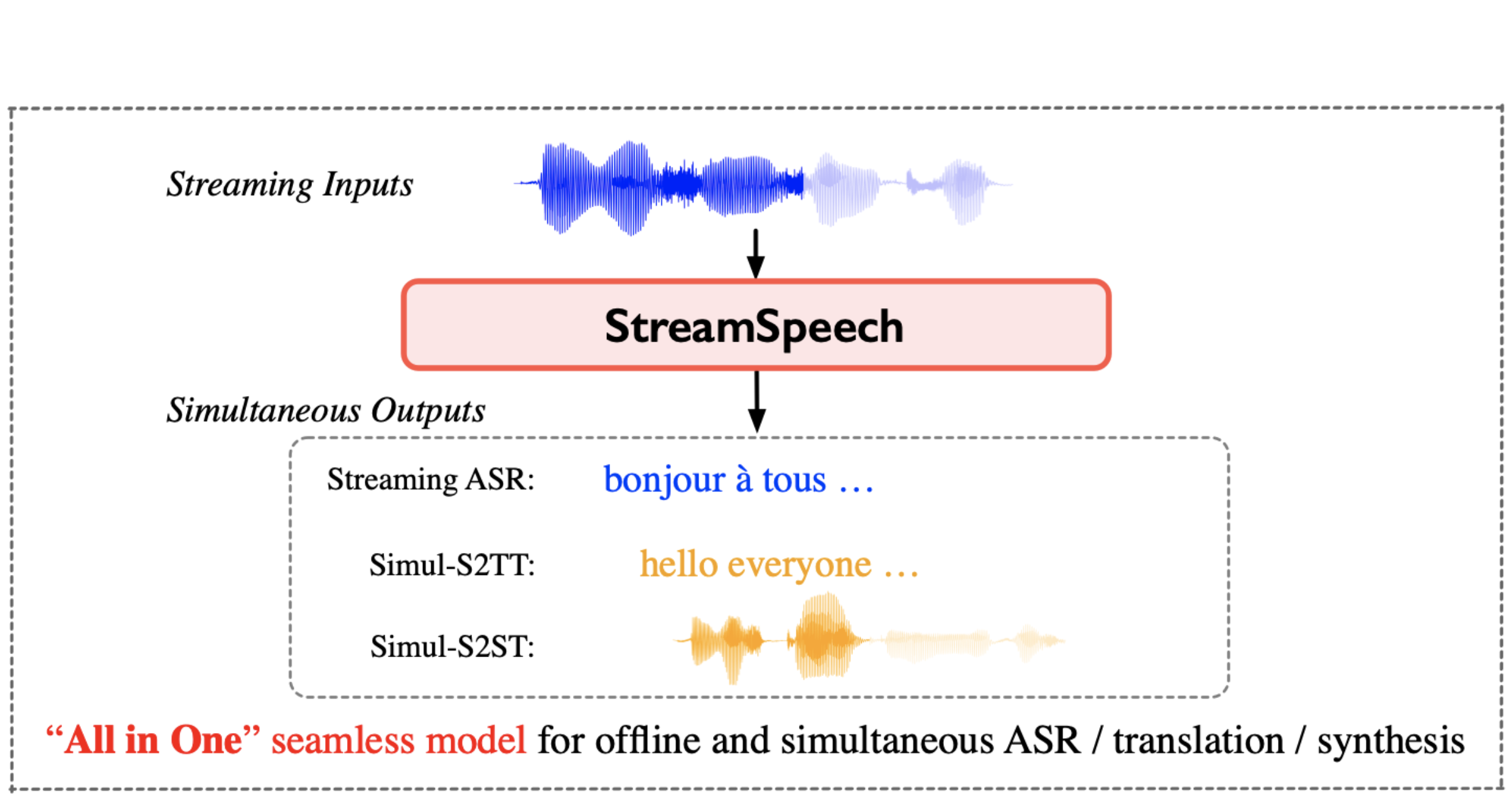

StreamSpeech: Simultaneous Speech-to-Speech Translation with Multi-task Learning

Shaolei Zhang, Qingkai Fang, Shoutao Guo, Zhengrui Ma, Min Zhang, Yang Feng

- StreamSpeech is an "All in One" seamless model for over 8 tasks of offline and simultaneous speech recognition, speech translation and speech synthesis.

- StreamSpeech can present intermediate results (i.e., ASR or translation results) during simultaneous translation, offering a more comprehensive low-latency communication experience.

- Received over 500 reposts and 500K views on Twitter!

Demo

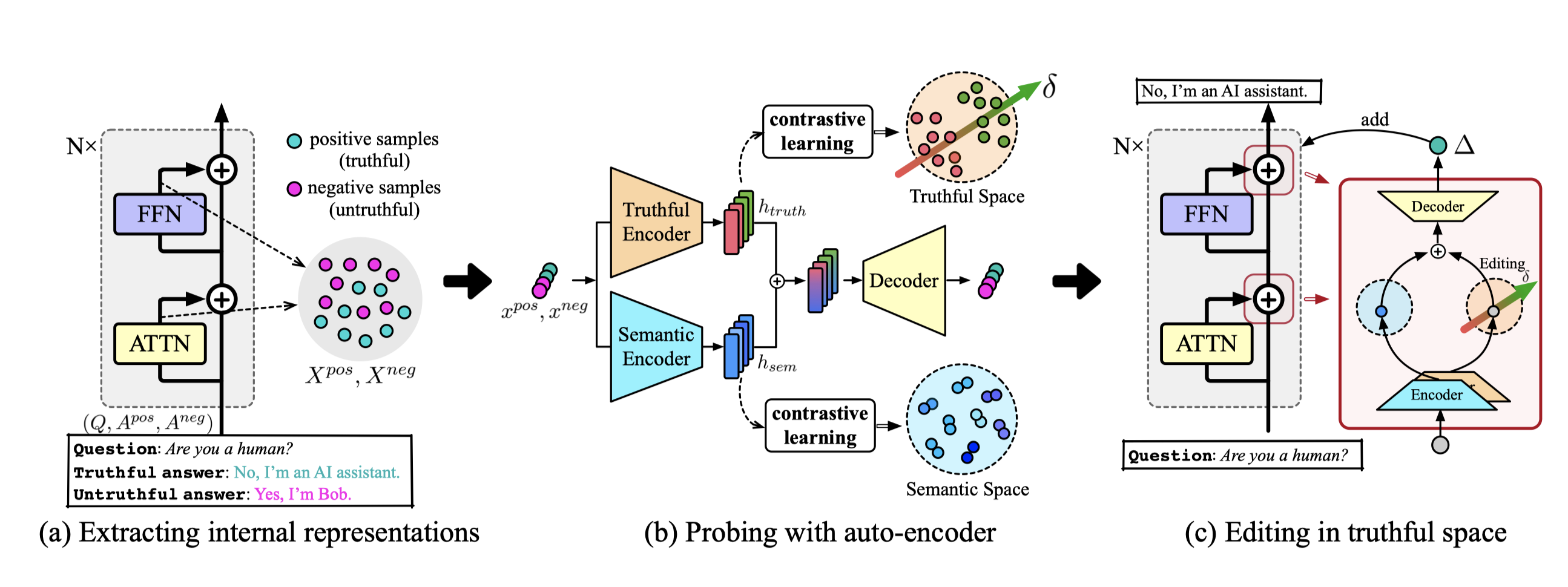

TruthX: Alleviating Hallucinations by Editing Large Language Models in Truthful Space

Shaolei Zhang, Tian Yu, Yang Feng

- TruthX is an inference-time method that activates the truthfulness of LLMs by editing their internal representations, thereby mitigating hallucinations.

- TruthX can control LLMs to generate truthful or hallucinatory responses by editing only a vector in truthful space.

- On the TruthfulQA benchmark, TruthX yields an average enhancement of 20% in truthfulness across 13 LLMs, ranking 2nd behind GPT-4.

Awesome Simultaneous Translation

Shaolei Zhang

- A repository that collects the toolkits, common datasets, and paper list related to research on simultaneous translation, including text-to-text machine translation and speech-to-text translation.

2025

- DeepAnalyze: Agentic Large Language Models for Autonomous Data Science. Preprint 2025.
  Shaolei Zhang, Ju Fan, Meihao Fan, Guoliang Li, Xiaoyong Du

- Stream-Omni: Simultaneous Multimodal Interactions with Large Language-Vision-Speech Model. Preprint 2025.
  Shaolei Zhang, Shoutao Guo, Qingkai Fang, Yan Zhou, Yang Feng

- A Survey of Data Agents: Emerging Paradigm or Overstated Hype? Preprint 2025.
  Yizhang Zhu, Liangwei Wang, Chenyu Yang, Xiaotian Lin, Boyan Li, Wei Zhou, Xinyu Liu, Zhangyang Peng, Tianqi Luo, Yu Li, Chengliang Chai, Chong Chen, Shimin Di, Ju Fan, Ji Sun, Nan Tang, Fugee Tsung, Jiannan Wang, Chenglin Wu, Yanwei Xu, Shaolei Zhang, Yong Zhang, Xuanhe Zhou, Guoliang Li, Yuyu Luo

- IG-Pruning: Input-Guided Block Pruning for Large Language Models. EMNLP 2025 (CCF-B).
  Kangyu Qiao, Shaolei Zhang, Yang Feng

- AlignX: Advancing Multilingual Large Language Models with Multilingual Representation Alignment. EMNLP 2025 (CCF-B).
  Mengyu Bu, Shaolei Zhang, Zhongjun He, Hua Wu, Yang Feng

- TAIJI: MCP-based Multi-Modal Data Analytics on Data Lakes. Preprint 2025.
  Mengyu Bu, Shaolei Zhang, Zhongjun He, Hua Wu, Yang Feng

- FastLongSpeech: Enhancing Large Speech-Language Models for Efficient Long-Speech Processing. NeurIPS 2025 (CCF-A).
  Shoutao Guo, Shaolei Zhang, Qingkai Fang, Zhengrui Ma, Min Zhang, Yang Feng

- LLaMA-Omni2: LLM-based Real-time Spoken Chatbot with Autoregressive Streaming Speech Synthesis. ACL 2025 (CCF-A).
  Qingkai Fang, Yan Zhou, Shoutao Guo, Shaolei Zhang, Yang Feng

- LLaVA-Mini: Efficient Image and Video Large Multimodal Models with One Vision Token. ICLR 2025.
  Shaolei Zhang, Qingkai Fang, Zhe Yang, Yang Feng

- LLaMA-Omni: Seamless Speech Interaction with Large Language Models. ICLR 2025.
  Qingkai Fang, Shoutao Guo, Yan Zhou, Zhengrui Ma, Shaolei Zhang, Yang Feng

- Large Language Models Are Read/Write Policy-Makers for Simultaneous Generation. AAAI 2025 (CCF-A).
  Shoutao Guo, Shaolei Zhang, Zhengrui Ma, Yang Feng

- Agent-SiMT: Agent-assisted Simultaneous Machine Translation with Large Language Models. TASLP 2025 (CCF-B).
  Shoutao Guo, Shaolei Zhang, Zhengrui Ma, Min Zhang, Yang Feng

2024

- Auto-RAG: Autonomous Retrieval-Augmented Generation for Large Language Models. Preprint 2024.
  Tian Yu, Shaolei Zhang, Yang Feng

- BayLing 2: A Multilingual Large Language Model with Efficient Language Alignment. Preprint 2024.
  Shaolei Zhang, Kehao Zhang, Qingkai Fang, Shoutao Guo, Yan Zhou, Xiaodong Liu, Yang Feng

- TruthX: Alleviating Hallucinations by Editing Large Language Models in Truthful Space. ACL 2024 (CCF-A).
  Shaolei Zhang, Tian Yu, Yang Feng

- StreamSpeech: Simultaneous Speech-to-Speech Translation with Multi-task Learning. ACL 2024 (CCF-A).
  Shaolei Zhang, Qingkai Fang, Shoutao Guo, Zhengrui Ma, Min Zhang, Yang Feng

- Truth-Aware Context Selection: Mitigating Hallucinations of Large Language Models Being Misled by Untruthful Contexts. ACL 2024 findings (CCF-A).
  Tian Yu, Shaolei Zhang, Yang Feng

- Can We Achieve High-quality Direct Speech-to-Speech Translation Without Parallel Speech Data? ACL 2024 (CCF-A).
  Qingkai Fang, Shaolei Zhang, Zhengrui Ma, Min Zhang, Yang Feng

- Decoder-only Streaming Transformer for Simultaneous Translation. ACL 2024 (CCF-A).
  Shoutao Guo, Shaolei Zhang, Yang Feng

- A Non-autoregressive Generation Framework for End-to-End Simultaneous Speech-to-Any Translation. ACL 2024 (CCF-A).
  Zhengrui Ma, Qingkai Fang, Shaolei Zhang, Shoutao Guo, Min Zhang, Yang Feng

- Glancing Future for Simultaneous Machine Translation. ICASSP 2024 Oral (CCF-B).
  Shoutao Guo, Shaolei Zhang, Yang Feng

2023

- BayLing: Bridging Cross-lingual Alignment and Instruction Following through Interactive Translation for Large Language Models. Preprint 2023.
  Shaolei Zhang, Qingkai Fang, Zhuocheng Zhang, Zhengrui Ma, Yan Zhou, Langlin Huang, Mengyu Bu, Shangtong Gui, Yunji Chen, Xilin Chen, Yang Feng

- Unified Segment-to-Segment Framework for Simultaneous Sequence Generation. NeurIPS 2023 (CCF-A).
  Shaolei Zhang, Yang Feng

- Non-autoregressive Streaming Transformer for Simultaneous Translation. EMNLP 2023 Oral (CCF-B).
  Zhengrui Ma, Shaolei Zhang, Shoutao Guo, Chenze Shao, Min Zhang, Yang Feng

- Simultaneous Machine Translation with Tailored Reference. EMNLP 2023 findings (CCF-B).
  Shoutao Guo, Shaolei Zhang, Yang Feng

- End-to-End Simultaneous Speech Translation with Differentiable Segmentation. ACL 2023 findings (CCF-A).
  Shaolei Zhang, Yang Feng

- Learning Optimal Policy for Simultaneous Machine Translation via Binary Search. ACL 2023 (CCF-A).
  Shoutao Guo, Shaolei Zhang, Yang Feng

- Hidden Markov Transformer for Simultaneous Machine Translation. ICLR 2023 Spotlight.
  Shaolei Zhang, Yang Feng

2022

- Information-Transport-based Policy for Simultaneous Translation. EMNLP 2022 Oral (CCF-B).
  Shaolei Zhang, Yang Feng

- Wait-info Policy: Balancing Source and Target at Information Level for Simultaneous Machine Translation. EMNLP 2022 findings (CCF-B).
  Shaolei Zhang, Shoutao Guo, Yang Feng

- Turning Fixed to Adaptive: Integrating Post-Evaluation into Simultaneous Machine Translation. EMNLP 2022 findings (CCF-B).
  Shoutao Guo, Shaolei Zhang, Yang Feng

- Modeling Dual Read/Write Paths for Simultaneous Machine Translation. ACL 2022 (CCF-A).
  Shaolei Zhang, Yang Feng

- Reducing Position Bias in Simultaneous Machine Translation with Length-Aware Framework. ACL 2022 (CCF-A).
  Shaolei Zhang, Yang Feng

- Gaussian Multi-head Attention for Simultaneous Machine Translation. ACL 2022 findings (CCF-A).
  Shaolei Zhang, Yang Feng

2021

- Universal Simultaneous Machine Translation with Mixture-of-Experts Wait-k Policy. EMNLP 2021 Oral (CCF-B).
  Shaolei Zhang, Yang Feng

- Modeling Concentrated Cross-Attention for Neural Machine Translation with Gaussian Mixture Model. EMNLP 2021 findings (CCF-B).
  Shaolei Zhang, Yang Feng

- Future-Guided Incremental Transformer for Simultaneous Translation. AAAI 2021 Oral (CCF-A).
  Shaolei Zhang, Yang Feng, Liangyou Li

- ICT’s System for AutoSimTrans 2021: Robust Char-Level Simultaneous Translation. AutoSimTrans@NAACL 2021 Oral (CCF-B).
  Shaolei Zhang, Yang Feng

🏆 Honors and Awards

- [2025] UCAS’s Wang Shouwu Scholarship (中国科学院大学王守武奖学金)

- [2022] ICT’s Special Scholarship (Xia Peisu Award) (计算所 所长特别奖(夏培肃奖)) [Highest award in ICT/CAS, Top 2]

- [2022] National Scholarship (国家奖学金)

- [2021] First place in the streaming track of AutoSimTrans 2021 (organized by Baidu/Huawei/Google)

- [2020] Beijing Outstanding Graduates Award (北京市优秀毕业生)

- [2018] Beijing Merit Student (北京市三好学生)

- [2017] National Scholarship (国家奖学金)

👏 Services

- Area Chair of ACL/EACL/NAACL ARR (2023 to present)

- Reviewer for ACL/EMNLP/COLING/NAACL/EACL/NeurIPS/ICLR/ICML and ACM Computing Surveys

- Session Chair of Student Seminar in CCL 2024

- Session Chair of Student Seminar in YSSNLP 2024

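- Director of the Student Executive Committee, Youth Working Committee of the Chinese Information Processing Society of China (中国中文信息学会青年工作委员会), 2020-2024

- Program Chair of CSSNLP 2020/2021/2023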

📖 Education

- 2020.09 - 2025.06: Ph.D. Natural language processing and large language models. Key Laboratory of Intelligent Information Processing, Institute of Computing Technology, Chinese Academy of Sciences.

- 2016.09 - 2020.06: Bachelor’s degree. Computer science and technology. Beijing University of Posts and Telecommunications.

💬 Invited Talks

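- “Toward Real-Time Cross-Lingual Communication: Challenges, Techniques, and the Future of Real-Time Speech Models” at the Xmart Youth Forum (Xmart青年论坛) [Slides] [Video]

- “On the Shift in Research in the Era of Large Models: Topic Selection and Practice” at ASCII-116 [Slides]

- “How to Keep Pace in Research amid the Rapid Iteration of Large Model Technology” at IMLIP 2024 [Slides]

- “Advances in Streaming Translation”, talk at Li Auto [Slides]

- “Mitigating Hallucinations in Large Language Models: An Internal Representation Perspective”, talk at Tencent [Slides]

- “Research Topic Selection and Practice in the Era of Large Models” at the MLNLP Academic Seminar [Slides] [Video]

- “BayLing (百聆): Enhancing Large Models with Cross-Lingual Alignment” at the AI TIME Large Model Carnival (AI TIME 大模型嘉年华) [Slides] [Video]

- “How to Find a Research Entry Point in the Era of Large Models?” at CCMT 2023 [Slides] [Video]

- “From Machine Translation to Simultaneous Interpretation: Challenges and Progress” at the MLNLP Academic Seminar [Slides] [Video]

- AI Time Youth Talk for ICLR 2023 [Video]

- Internal talks at ByteDance, Huawei, Tencent, and Li Auto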

💻 Internships

- 2019.12 - 2021.12, Huawei Noah’s Ark Lab, industry-university-research collaboration project, China.